Will AI take my job?

ai, software, career, future

That is the question everybody asks themselves these days.

Will AI take my job?

Some would reply with a straight YES; others are in denial. Some think it's a bubble about to burst. I'd say it's a bit of everything.

The case for YES

For sure, some jobs can be performed by AI. Some people in Silicon Valley, working closely with the advanced models not yet released to the general public, even go as far as saying "it's over for software developers".

Elon Musk is convinced that AI-powered robots will replace car drivers entirely and even compete with world-class surgeons, predicting that autonomous vehicles and robots will ultimately outperform humans at both. So what chance do we mere developers have? (source: https://cybernews.com/news/musk-fii-conference-interview-tesla-robots-ai-cybercab-mars-predictions)

Given all the above and the continuous improvements in the AI space, coding will no longer be something we do manually. In a way, the industry was already moving more and more application behaviour from code into configuration, so this is just trending in the same direction: less code and more configuration, more plumbing. (Small digression: this statement is particularly true if you look at the OWASP Top 10 2025 list, where Security Misconfiguration is now ranked #2 among the security risks.)

The case for NO

The reality is a bit greyer than it seems. A software developer or engineer does not just "code" all day; if that were the case, many would refer to those people as "coding monkeys". We never wanted to be "coding monkeys", following orders and JFDI-ing it. We have been taught to participate in the full development life-cycle.

So maybe we will code less, simply because we have better tools that help us code faster. Our "active" role is changing to a "supervisor" role, but we need to stay in control and steer the ship the right way. This is why I personally prefer to have a step-by-step conversation with AI models, instead of letting an AI agent run wild and having to undo everything it just changed.

My main concerns are for junior developers.

There is a reality now where junior developers, and new professionals coming into the world of IT in general, are not guided properly. They are handed a very powerful tool, AI and coding agents in general, that glosses over some important details simply because its users are not yet able to ask the important questions or provide the AI with the necessary context, such as:

I need this application to compile

I need the code to pass all tests

I need the code to be secure

I need the configuration to be secure

I need the code to be in line with the company's coding standards

etc

There is nothing like the enthusiasm and energy of a junior developer. When I was a junior myself I was told "Hey, slow down, cowboy!" LOL. I remember I just wanted to smash as many tickets as I could, one after the other.

In reality, senior engineers are more concerned with reliability, future-proofing, security and testability, to name just some characteristics of a well-designed solution, than with speed of delivery.

There are already some remedies for this, for example AGENTS.md and CONTRIBUTING.md files. AGENTS.md is a specialised, vendor-neutral Markdown file placed in the root of a code repository to provide context and instructions to AI coding agents; CONTRIBUTING.md is written for human contributors, but it captures the same kind of conventions. Unlike README.md (for humans), AGENTS.md acts as a "playbook" or configuration layer that helps AI agents understand project-specific rules, build steps, and conventions, reducing inconsistencies.

For example:

# Project Guidelines

## Commands

- `npm test` - Run tests

- `npm run build` - Build for production

- `npm run lint` - Check code style

## Project Structure

- `src/components/` - React components

- `src/utils/` - Helper functions

- `src/api/` - API client and types

## Code Style

- Use TypeScript for all new files

- Components use PascalCase (e.g., `UserProfile.tsx`)

- Utilities use camelCase (e.g., `formatDate.ts`)

(source: https://ona.com/docs/ona/agents-md)

Having said that, a level of supervision and critical thinking is needed to get the most out of AI when building software. For this reason I think our role as software engineers will shift even more towards three fundamental aspects:

1) quality assurance: how do we make sure AI-generated code meets expectations? At the very minimum we need a test plan before we even ask AI to generate a single line of code. Or why not leverage Test-Driven Development techniques?

2) capturing clear requirements: through effective use of our communication skills, mostly written, and by practising clarity and conciseness.

3) plumbing and architecture: how software fits together in the company's ecosystem and how we deliver it.
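To make the test-first idea in point 1 a bit more concrete, here is a minimal sketch in TypeScript. Everything in it is an assumption made for illustration: the `formatDate` utility (the name is borrowed from the AGENTS.md sample above), the DD/MM/YYYY output format, and the tiny `runSpec` helper, which stands in for a real test runner. The point is only the order of work: the expectations are written down first, and the implementation, whether typed by hand or generated by AI, is accepted only once they pass.

```typescript
// Step 1: write the expectations BEFORE asking AI for any implementation.
// The cases and the DD/MM/YYYY format are hypothetical examples.
type TestCase = { name: string; input: string; expected: string };

const formatDateSpec: TestCase[] = [
  { name: "formats an ISO date as DD/MM/YYYY", input: "2025-03-09", expected: "09/03/2025" },
  { name: "keeps leading zeros", input: "2001-01-05", expected: "05/01/2001" },
];

// Step 2: the candidate implementation (hand-written or AI-generated).
function formatDate(isoDate: string): string {
  const match = /^(\d{4})-(\d{2})-(\d{2})$/.exec(isoDate);
  if (match === null) {
    throw new Error(`Invalid ISO date: ${isoDate}`);
  }
  const [, year, month, day] = match;
  return `${day}/${month}/${year}`;
}

// Calls the implementation and turns an exception into a readable result.
function safeCall(impl: (input: string) => string, input: string): string {
  try {
    return impl(input);
  } catch (error) {
    return `threw: ${String(error)}`;
  }
}

// Step 3: run the spec and collect a message for every failing case.
function runSpec(impl: (input: string) => string, spec: TestCase[]): string[] {
  const failures: string[] = [];
  for (const testCase of spec) {
    const actual = safeCall(impl, testCase.input);
    if (actual !== testCase.expected) {
      failures.push(`${testCase.name}: expected ${testCase.expected}, got ${actual}`);
    }
  }
  return failures;
}

const failures = runSpec(formatDate, formatDateSpec);
console.log(failures.length === 0 ? "all tests pass" : failures.join("\n"));
```

In a real project the spec would live in the test suite that `npm test` runs, so the same check guards every later change an agent makes.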

In my career I realised early on that if I could not communicate my ideas clearly, I could neither get them across nor work effectively within a team. So I put a lot of effort into learning English (as a non-native English speaker), but also into researching and practising effective communication techniques, so that I would feel more confident sharing an idea during a team discussion or demoing a feature in front of a mixed technical and non-technical audience.

A bubble about to burst?

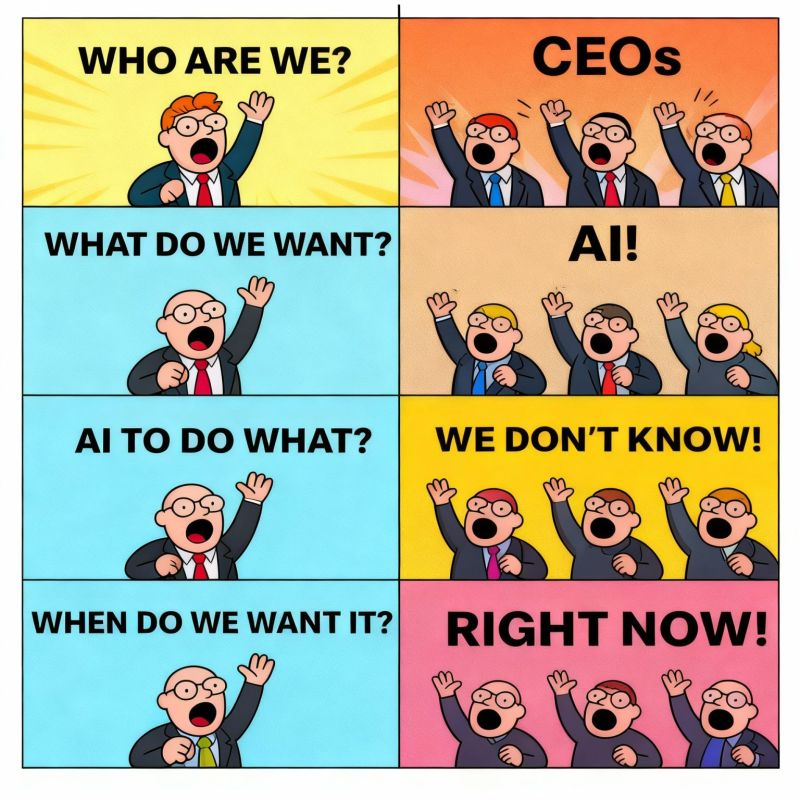

People who think AI is a bubble about to burst usually aren’t saying “AI is useless.” They’re saying the hype has outrun reality. After all, how many industry overhauls have we survived so far? If you think about it: OOP, Agile, Functional Programming, No-Code/Low-Code, Big Data, Serverless, MS Teams (just kidding), you name it... we are still here, still standing.

There are two main reasons for this view:

1) Expectations are wildly inflated: whether they come from people with engineering skills or not, they are out of touch with reality. Moreover, seeing managers fire developers lately because they think they can replace them with AI bots leaves me, as a software engineer, disappointed and feeling underappreciated for all we do. I expect this trend to come back like a boomerang: when all those AI-generated lines of code in production turn into a house-on-fire situation, we are going to be called to "come back and fix the mess".

2) Costs and infrastructure are unsustainable: the reason this AI infrastructure is still standing is possibly that it is backed by huge corporations with a wild amount of computing power and a wild amount of cash behind them. In reality it's unclear how sustainable this is as a business model in the long run. The skyrocketing cost of hardware and the impact on climate change are also factors that must be considered, and we are only at the start. I cannot imagine how bad the situation needs to become before we put a stop to this craziness of data centres built purely to power "prompts".

(source: https://www.lse.ac.uk/granthaminstitute/explainers/what-direct-risks-does-ai-pose-to-the-climate-and-environment/)

Conclusions

In summary, is AI going to take my job? No, I don't think so. But AI will take away one of the aspects that made my job enjoyable, which is coding. I don't think coding will fill as much of my day-to-day as it currently does, and for this reason I must adapt and accept it. For others ready to jump-start a new career I would say: why not? Do it if you feel you have enough motivation. For example, why not transition to DevOps or Security? Those are roles where coding is already minimal (generally speaking) and which are very sought after. I would, however, advise against choosing a new career just because you feel you have no way out; you will only feel frustrated. Instead, try to find something else you enjoy now, and gently transition and upskill. We are not there yet - we are not imminently going to go extinct like the dinosaurs.

-- written by a Human